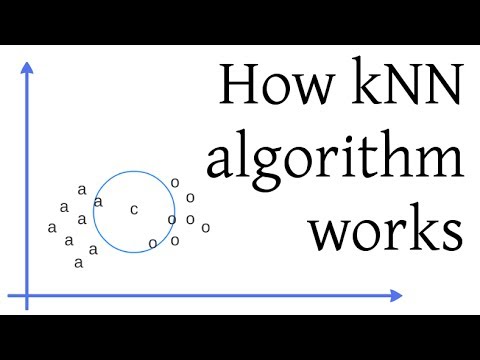

How kNN algorithm works

Jarne posted on 2019/11/18

Video vocabulary

specific

US /spɪˈsɪfɪk/ ・ UK /spəˈsɪfɪk/

- Adjective

- Precise; particular; relating only to that thing

- Concerning one particular thing or kind of thing

A2

present

US /ˈprɛznt/ ・ UK /ˈpreznt/

- Adjective

- Being in attendance; being there; having turned up

- Being in a particular place; existing or occurring now.

- Noun

- Gift

- Verb tense indicating an action is happening now

A1 TOEIC

multiple

US /ˈmʌltəpəl/ ・ UK /ˈmʌltɪpl/

- Adjective

- Having or involving more than one of something

- Having or involving several parts, elements, or members.

- Countable Noun

- Number produced by multiplying a smaller number by a whole number

- A number of identical circuit elements connected in parallel or series.

B1

majority

US /məˈdʒɔrɪti, -ˈdʒɑr-/ ・ UK /məˈdʒɒrətɪ/

- Noun (Countable/Uncountable)

- Amount that is more than half of a group

- The age at which a person is legally considered an adult.

B1 TOEIC
