Subtitles & vocabulary

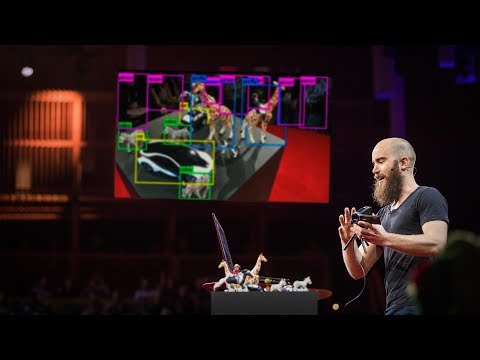

【TED】Joseph Redmon: How computers learn to recognize objects instantly

Caurora posted on 2017/12/10

Video vocabulary

stuff

US /stʌf/

・

UK /stʌf/

- Uncountable Noun

- General term for things, materials, or objects

- Transitive Verb

- To push material into something forcefully

B1

process

US /ˈprɑsˌɛs, ˈproˌsɛs/

・

UK /ˈprəʊses/

- Transitive Verb

- To organize and use data in a computer

- To deal with official forms in the way required

- Noun (Countable/Uncountable)

- The handling of official forms in the required way

- A series of changes that occur slowly and naturally

A2 TOEIC

significant

US /sɪɡˈnɪfɪkənt/

・

UK /sɪɡˈnɪfɪkənt/

- Adjective

- Large enough to be noticed or have an effect

- Having meaning; important; noticeable

A2 TOEIC