Subtitles & vocabulary

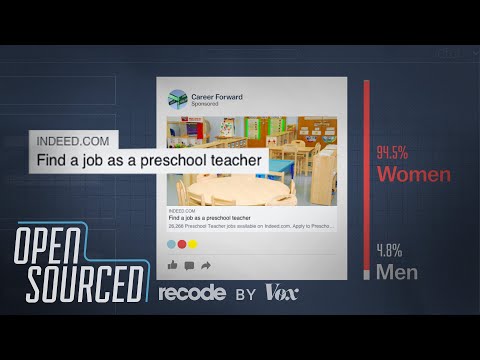

Facebook showed this ad to 95% women. Is that a problem?


林宜悉 posted on 2020/07/31

Video vocabulary

sort

US /sɔrt/ ・ UK /sɔːt/

- Transitive Verb
  - To organize things by putting them into groups
  - To deal with things in an organized way
- Noun
  - Group or class of similar things or people

A1 · TOEIC

relevant

US /ˈrɛləvənt/ ・ UK /ˈreləvənt/

- Adjective
  - Having an effect on an issue; related or current

A2 · TOEIC

audience

US /ˈɔdiəns/ ・ UK /ˈɔːdiəns/

- Noun (Countable/Uncountable)
  - Group of people attending a play, movie, etc.

A2 · TOEIC

general

US /ˈdʒɛnərəl/ ・ UK /ˈdʒenrəl/

- Adjective
  - Widespread, normal or usual
  - Not detailed or specific; vague
- Countable Noun
  - High-ranking officer in the army

A1 · TOEIC
