Subtitles & vocabulary

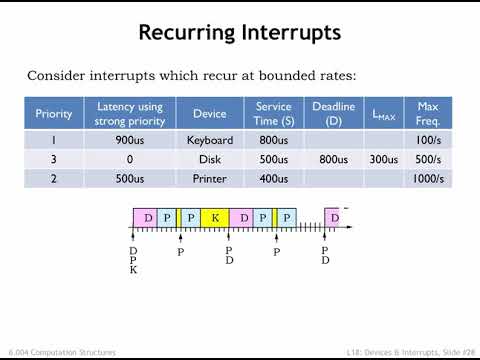

18.2.6 Strong Priorities


林宜悉 posted on 2020/03/29

Video vocabulary

multiple

US /ˈmʌltəpəl/ ・ UK /ˈmʌltɪpl/

- Adjective
  - Having or involving more than one of something
  - Having or involving several parts, elements, or members
- Countable Noun
  - Number produced by multiplying a smaller number by a whole number
  - A number of identical circuit elements connected in parallel or series

B1

routine

US /ruˈtin/ ・ UK /ruːˈtiːn/

- Adjective
  - Happening or done regularly or habitually
  - Always the same; boring through lack of variety
- Noun (Countable/Uncountable)
  - Regular or habitual way of behaving or doing things
  - Series of actions that make up a performance

A2 TOEIC

guarantee

US /ˌɡærənˈti/ ・ UK /ˌɡærənˈtiː/

- Transitive Verb
  - To promise to repair a broken product
  - To promise that something will happen or be done
- Countable Noun
  - A promise to repair a broken product
  - Promise that something will be done as expected

A2 TOEIC

progress

US /ˈprɑɡˌrɛs, -rəs, ˈproˌɡrɛs/ ・ UK /ˈprəʊɡres/

- Verb (Transitive/Intransitive)
  - To move forward or toward a place or goal
  - To make progress; develop or improve
- Uncountable Noun
  - Act of moving forward
  - The process of improving or developing something over a period of time

A2 TOEIC
