Subtitles & vocabulary

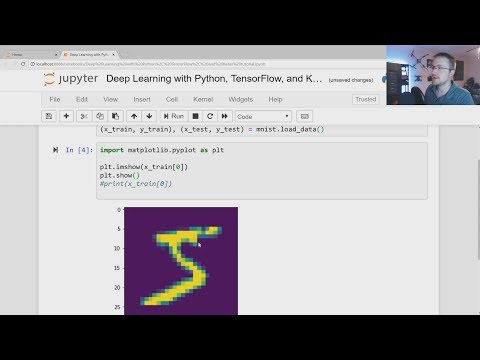

Deep Learning with Python, TensorFlow, and Keras tutorial

YUAN posted on 2019/04/08

Video vocabulary

sort

US /sɔrt/ ・ UK /sɔːt/

- Transitive Verb

- To organize things by putting them into groups

- To deal with things in an organized way

- Noun

- Group or class of similar things or people

A1 TOEIC

basically

US /ˈbesɪkəli,-kli/ ・ UK /ˈbeɪsɪkli/

- Adverb

- Used before you explain something simply, clearly

- In essence; when you consider the most important aspects of something.

A2

chaos

US /ˈkeˌɑs/ ・ UK /ˈkeɪɒs/

- Noun (plural)

- State of utter confusion or disorder

- Uncountable Noun

- Complete disorder and confusion.

- Behavior so unpredictable as to appear random, owing to great sensitivity to small changes in conditions.

B1
