
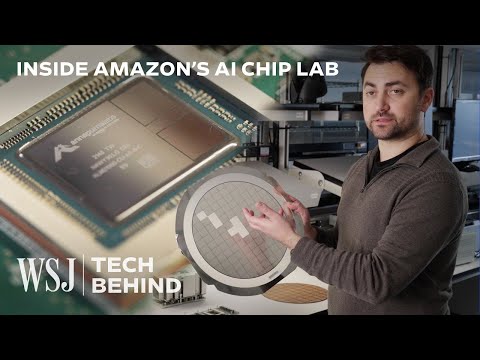

Inside the Making of an AI Chip | WSJ Tech Behind


林宜悉 posted on 2024/01/06

Video vocabulary

process

US /ˈprɑsˌɛs, ˈproˌsɛs/ ・ UK /ˈprəʊses/

- Transitive Verb
  - To organize and use data in a computer
  - To deal with official forms in the way required
- Noun (Countable/Uncountable)
  - The act of dealing with official forms in the way required
  - A set of changes that occur slowly and naturally

A2 · TOEIC

essential

US /ɪˈsɛnʃəl/ ・ UK /ɪ'senʃl/

- Adjective
  - Extremely or most important and necessary
  - Fundamental; basic
- Noun
  - Short for essential oil: a concentrated hydrophobic liquid containing volatile aroma compounds from plants

B1 · TOEIC

instance

US /ˈɪnstəns/ ・ UK /'ɪnstəns/

- Noun (Countable/Uncountable)
  - An example of something; a case
  - An occurrence of something
- Transitive Verb
  - To give something as an example; to cite

A2 · TOEIC
