Subtitles & vocabulary

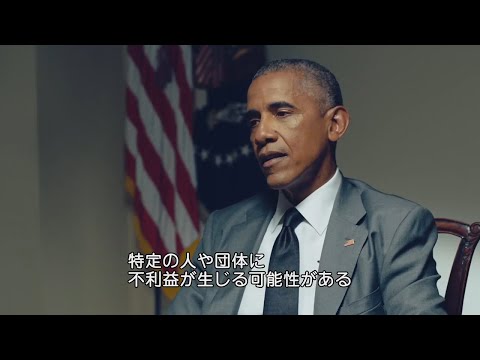

In a World Where Self-Driving Cars Are on the Road | Barack Obama × Joichi Ito | Ep7 | WIRED.jp

林宜悉 posted on 2021/07/29

Video vocabulary

essentially

US /ɪˈsenʃəli/ ・ UK /ɪˈsenʃəli/

- Adverb

- Basically; (said when stating the basic facts)

- Used to emphasize the basic truth or fact of a situation.

A2

bunch

US /bʌntʃ/ ・ UK /bʌntʃ/

- Noun (Countable/Uncountable)

- A group of things of the same kind

- A group of people.

- Transitive Verb

- To group people or things closely together

B1

instinct

US /ˈɪnˌstɪŋkt/ ・ UK /ˈɪnstɪŋkt/

- Noun

- Natural way of thinking; intuition

- The natural way a person or animal thinks or behaves

B1

figure

US /ˈfɪɡjɚ/ ・ UK /ˈfɪɡə/

- Verb (Transitive/Intransitive)

- To appear in a game, play or event

- To calculate how much something will cost

- Noun

- Your body shape

- Numbers in a calculation

A1 TOEIC
