Subtitles & vocabulary

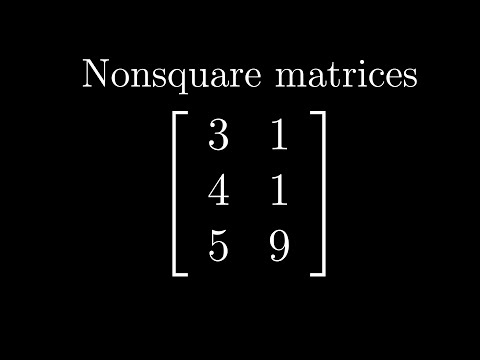

Nonsquare matrices as transformations between dimensions | Essence of linear algebra, chapter 8

tai posted on 2021/02/07

Video vocabulary

context

US /ˈkɑnˌtɛkst/ ・ UK /ˈkɒntekst/

- Noun (Countable/Uncountable)

- Set of facts surrounding a person or event

- The circumstances that form the setting for an event, statement, or idea, and in terms of which it can be fully understood and assessed.

A2

completely

US /kəmˈpliːtli/ ・ UK /kəmˈpliːtli/

- Adverb

- In every way or as much as possible

- To the greatest extent; thoroughly.

A1

spot

US /spɑt/ ・ UK /spɒt/

- Noun

- A certain place or area

- A difficult time; awkward situation

- Transitive Verb

- To see someone or something by chance

A2, TOEIC
