Subtitles & vocabulary

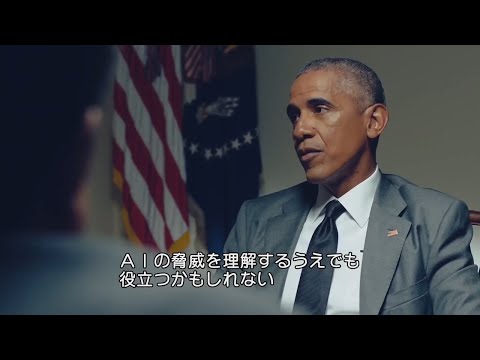

What Is National Security in the Age of AI? | Barack Obama × Joichi Ito | Ep3 | WIRED.jp

林宜悉 posted on 2020/08/05

Video vocabulary

sort

US /sɔrt/ ・ UK /sɔːt/

- Transitive Verb

- To organize things by putting them into groups

- To deal with things in an organized way

- Noun

- Group or class of similar things or people

A1 TOEIC

bunch

US /bʌntʃ/ ・ UK /bʌntʃ/

- Noun (Countable/Uncountable)

- A group of things of the same kind

- A group of people

- Transitive Verb

- To group people or things closely together

B1

sophisticated

US /səˈfɪstɪˌketɪd/ ・ UK /səˈfɪstɪkeɪtɪd/

- Adjective

- Making a good-sounding but misleading argument

- Wise in the ways of the world; having refined taste

- Transitive Verb

- To make someone more worldly and experienced

B1 TOEIC

figure

US /ˈfɪɡjɚ/ ・ UK /ˈfɪɡə/

- Verb (Transitive/Intransitive)

- To appear in a game, play or event

- To calculate how much something will cost

- Noun

- Your body shape

- Numbers in a calculation

A1 TOEIC
